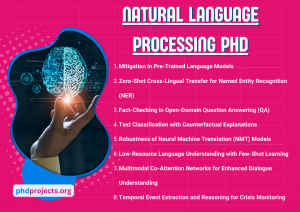

Natural Language Processing PhD

Natural Language Processing (NLP) plays an important role in enabling computers to interpret, evaluate and manipulate human language. Let us be your trusted guide on your journey to professional success! Share with us all your requirements, along with the base papers and reference papers for your concept, and we will assist you with all your research needs. In this article, we provide some research-worthy areas in NLP:

- Bias Detection and Mitigation in Pre-Trained Language Models

- Problem Statement:

- Pre-trained language models such as T5 or GPT-4 can exhibit unfairness toward certain genders, races or socioeconomic classes, which leads to biased and improper predictions in downstream tasks.

- This project aims to detect the extent and types of bias in pre-trained models and to mitigate it while sustaining model performance.

- Research queries:

- How can bias be efficiently identified and evaluated in pre-trained language models?

- What adversarial or fairness-aware training techniques can mitigate biases without significantly degrading performance?
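One common way to approach the first question is a counterfactual swap test. You change only the group-identifying words in a sentence and check whether the model's score moves. The sketch below assumes the reader supplies a scoring function `score`. The swap list and the `toy_score` stand-in model are hypothetical placeholders, not a standard benchmark.

```python
"""Counterfactual word-swap probe for group bias (illustrative sketch)."""

# Hypothetical swap list; a real study would use a curated, validated lexicon.
SWAPS = {"he": "she", "she": "he", "his": "her", "her": "his",
         "man": "woman", "woman": "man"}


def swap_terms(sentence: str) -> str:
    """Lowercase the sentence and replace each listed word with its counterpart."""
    return " ".join(SWAPS.get(w, w) for w in sentence.lower().split())


def bias_gap(score, sentences) -> float:
    """Mean absolute change in `score` when only the group terms are swapped.

    `score` is any callable mapping a sentence to a number, for example a
    model's probability of the positive class. A gap of 0.0 means the model
    scores every original/swapped pair identically.
    """
    gaps = [abs(score(s) - score(swap_terms(s))) for s in sentences]
    return sum(gaps) / len(gaps)


def toy_score(sentence: str) -> float:
    """Hypothetical biased 'model' that rewards the word 'he'."""
    return 0.9 if "he" in sentence.lower().split() else 0.5


templates = ["he is a brilliant engineer", "she is a brilliant engineer"]
print(round(bias_gap(toy_score, templates), 2))  # 0.4
```

Averaging over many templates gives a rough bias estimate. Other attributes, such as race or socioeconomic class, need their own swap lists.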

- Zero-Shot Cross-Lingual Transfer for Named Entity Recognition (NER)

- Problem Statement:

- Because annotated data is scarce, current NER (Named Entity Recognition) models struggle to generalize to low-resource languages.

- This project uses pre-trained multilingual models to design a zero-shot cross-lingual transfer technique capable of detecting entities in resource-scarce languages.

- Research queries:

- How can cross-lingual representations be aligned effectively for zero-shot NER tasks?

- What data augmentation and unsupervised learning methods enhance zero-shot NER performance?
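Whichever technique is used, zero-shot NER is usually scored at the entity level rather than per token. A prediction only counts if both the span and the type match. Below is a minimal sketch of that scoring, assuming BIO-tagged sequences as in CoNLL-style data. The lenient handling of stray `I-` tags is our own choice and may differ from the official `conlleval` script.

```python
"""Entity-level precision, recall and F1 for BIO-tagged NER output (sketch)."""


def bio_spans(tags):
    """Convert a BIO tag sequence into a set of (type, start, end) spans.

    `end` is exclusive. An I- tag that does not continue an entity of the
    same type starts a new entity (a lenient convention of our choosing).
    """
    spans, start, etype = set(), None, None
    for i, tag in enumerate(list(tags) + ["O"]):  # sentinel "O" closes the last span
        prefix, _, label = tag.partition("-")
        continues = prefix == "I" and label == etype
        if etype is not None and not continues:
            spans.add((etype, start, i))
            etype = None
        if prefix in ("B", "I") and not continues:
            start, etype = i, label
    return spans


def entity_prf(gold_tags, pred_tags):
    """Precision, recall and F1 over exact-match (type, start, end) spans."""
    gold, pred = bio_spans(gold_tags), bio_spans(pred_tags)
    tp = len(gold & pred)
    precision = tp / len(pred) if pred else 0.0
    recall = tp / len(gold) if gold else 0.0
    f1 = 2 * precision * recall / (precision + recall) if tp else 0.0
    return precision, recall, f1


gold = ["B-PER", "I-PER", "O", "B-LOC"]
pred = ["B-PER", "I-PER", "O", "O"]  # e.g. a zero-shot model missed the location
print(entity_prf(gold, pred))
```

Here precision is 1.0 and recall is 0.5, because the one predicted entity is correct but the location was missed.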

- Fact-Checking in Open-Domain Question Answering (QA)

- Problem Statement:

- Due to the lack of fact-checking algorithms, open-domain QA systems often provide factually inaccurate answers.

- This project seeks to create a fact-checking model that integrates knowledge graphs and other external sources to verify the answers generated by open-domain QA models.

- Research queries:

- How can knowledge graphs be efficiently integrated into open-domain QA models for fact verification?

- What strategies can identify and flag potentially incorrect answers for further examination?
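As a toy illustration of the second question, an answer can be checked against knowledge-graph triples and flagged when the graph disagrees. The sketch assumes the question has already been parsed into a `(?, relation, object)` pattern. A hypothetical two-triple graph stands in for a real store such as Wikidata.

```python
"""Flag QA answers that a knowledge graph does not support (toy sketch)."""

# Hypothetical miniature knowledge graph of (subject, relation, object) triples.
KG = {
    ("Paris", "capital_of", "France"),
    ("Berlin", "capital_of", "Germany"),
}


def check_answer(answer, relation, obj, kg=KG):
    """Return 'supported', 'contradicted' or 'unverifiable' for an answer.

    The question is assumed to be parsed already, e.g. "What is the capital
    of France?" becomes (?, "capital_of", "France"). The relation is treated
    as single-valued, so any other known subject counts as a contradiction.
    """
    known = {s for (s, r, o) in kg if r == relation and o == obj}
    if answer in known:
        return "supported"
    if known:  # the graph holds a different answer: flag for review
        return "contradicted"
    return "unverifiable"


print(check_answer("Paris", "capital_of", "France"))  # supported
print(check_answer("Lyon", "capital_of", "France"))   # contradicted
print(check_answer("Rome", "capital_of", "Italy"))    # unverifiable
```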

- Explainable Text Classification with Counterfactual Explanations

- Problem Statement:

- Text classification models are typically regarded as black boxes, because their complexity makes it difficult for users to interpret their decision-making process.

- Develop interpretable text classification models that generate counterfactual explanations highlighting the main decision determinants.

- Research queries:

- What explainability methods can effectively detect the words that drive decisions in classification models?

- How can counterfactual explanations improve user understanding of, and trust in, NLP models?
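A counterfactual explanation answers "what minimal change would flip the decision?". The sketch below finds one greedily, deleting the most influential words until the label changes. The keyword weights stand in for a trained classifier and are purely hypothetical. Real systems usually search over word substitutions as well as deletions.

```python
"""Greedy deletion-based counterfactual explanation (illustrative sketch)."""

# Hypothetical keyword weights standing in for a trained text classifier.
WEIGHTS = {"great": 2.0, "love": 1.5, "boring": -2.0, "bad": -1.5}


def score(words):
    return sum(WEIGHTS.get(w, 0.0) for w in words)


def predict(words):
    return "positive" if score(words) > 0 else "negative"


def counterfactual(text, max_edits=3):
    """Delete the most influential words until the predicted label flips.

    Returns (removed_words, edited_text), or None if the label does not
    flip within `max_edits` deletions.
    """
    words = text.lower().split()
    original = predict(words)
    sign = 1 if original == "positive" else -1
    removed = []
    for _ in range(max_edits):
        if not words:
            break
        # Choose the deletion that pushes the score hardest against the original label.
        i = min(range(len(words)),
                key=lambda j: sign * score(words[:j] + words[j + 1:]))
        removed.append(words.pop(i))
        if predict(words) != original:
            return removed, " ".join(words)
    return None


print(counterfactual("Great plot and I love the cast"))
# (['great', 'love'], 'plot and i the cast')
```

The removed words are the decision determinants. The edited text is the counterfactual a user would be shown.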

- Robustness of Neural Machine Translation (NMT) Models Against Adversarial Attacks

- Problem Statement:

- NMT models are susceptible to adversarial attacks that subtly perturb the input sentence in order to manipulate the translation.

- This research mainly focuses on assessing the robustness of NMT (Neural Machine Translation) models against adversarial attacks and on developing effective training strategies to improve it.

- Research queries:

- How do various types of adversarial attacks impact NMT model performance?

- What adversarial training strategies can efficiently enhance the robustness of NMT models?
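A simple starting point for both questions is typo-style noise. Swap adjacent inner characters of some words and measure how translations degrade. Then add the noisy sources, paired with clean targets, to the training data. This is random perturbation rather than a true worst-case adversarial attack. The parameters `rate`, `seed` and `copies` are illustrative choices of our own.

```python
"""Typo-style noise for NMT robustness tests and noisy-data training (sketch)."""
import random


def swap_noise(sentence, rate=0.3, seed=0):
    """Swap two adjacent inner characters in roughly `rate` of the words.

    Words shorter than 4 characters are left alone, and the first and last
    characters of every word are preserved.
    """
    rng = random.Random(seed)
    out = []
    for word in sentence.split():
        if len(word) >= 4 and rng.random() < rate:
            i = rng.randrange(1, len(word) - 2)  # i and i + 1 are inner positions
            word = word[:i] + word[i + 1] + word[i] + word[i + 2:]
        out.append(word)
    return " ".join(out)


def augment(pairs, copies=2):
    """Return the clean (source, target) pairs plus noisy-source copies.

    Targets stay clean so the model learns to translate through the noise.
    """
    noisy = [(swap_noise(src, rate=0.3, seed=k), tgt)
             for k in range(copies) for src, tgt in pairs]
    return pairs + noisy


src = "the translation model handles misspelled input"
print(swap_noise(src, rate=1.0))
```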

- Low-Resource Language Understanding with Few-Shot Learning

- Problem Statement:

- Due to insufficient annotated training data, many NLP models perform poorly in resource-scarce languages.

- This study intends to investigate few-shot learning techniques to improve language understanding and translation in low-resource languages.

- Research queries:

- Which transfer learning techniques are most effective for few-shot learning in low-resource languages?

- How can multilingual embeddings be leveraged for effective few-shot learning?

- Knowledge Graph Construction and Reasoning for Domain-Specific Applications

- Problem Statement:

- Constructing a domain-specific knowledge graph requires accurate extraction of entities and relations from unstructured text, and reasoning over the resulting graphs is equally crucial.

- This research mainly concentrates on designing knowledge graph construction and reasoning mechanisms for specific domains such as law, finance or healthcare.

- Research queries:

- What unsupervised or weakly supervised techniques enhance entity and relation extraction in specific domains?

- How can graph neural networks be efficiently implemented for domain-specific knowledge graph reasoning?

- Multimodal Co-Attention Networks for Enhanced Dialogue Understanding

- Problem Statement:

- In multimodal environments, current dialogue systems have a limited ability to incorporate audio and video context, which constrains their understanding of the conversation.

- This research aims to advance dialogue understanding by modeling a multimodal co-attention network that integrates audio, images and text.

- Research queries:

- How can co-attention techniques dynamically align textual, visual and auditory data in dialogue systems?

- What data augmentation methods can enhance the robustness of multimodal dialogue models?
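The core of a co-attention layer is an affinity matrix between two modalities, normalized in both directions so that each side attends over the other. Below is a dependency-free sketch for text tokens and visual regions. It assumes both are already embedded in the same dimension. It also omits the learned projection weights that a trained network would place inside the affinity computation. A third modality such as audio could be paired in the same way.

```python
"""Parallel co-attention between text and visual features (pure-Python sketch)."""
import math


def softmax(xs):
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def transpose(m):
    return [list(col) for col in zip(*m)]


def matmul(a, b):
    """(n x k) @ (k x m) for lists of lists."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def co_attention(text, vision):
    """Attend text over vision and vision over text.

    text: T x d token features; vision: V x d region features (same d).
    Affinity L = text @ vision^T (T x V); a trained layer would use
    text @ W @ vision^T with a learned W, omitted here.
    """
    affinity = matmul(text, transpose(vision))                      # T x V
    text_weights = [softmax(row) for row in affinity]               # each token over regions
    vision_weights = [softmax(col) for col in transpose(affinity)]  # each region over tokens
    text_context = matmul(text_weights, vision)    # T x d: visual summary per token
    vision_context = matmul(vision_weights, text)  # V x d: textual summary per region
    return text_context, vision_context


text = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]  # 3 tokens, d = 2 (made-up features)
vision = [[1.0, 0.0], [0.0, 1.0]]             # 2 regions
text_ctx, vision_ctx = co_attention(text, vision)
print(len(text_ctx), len(vision_ctx))  # 3 2
```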

- Temporal Event Extraction and Reasoning for Crisis Monitoring

- Problem Statement:

- Building timelines and understanding how emergencies evolve requires accurate extraction of temporal events and sound reasoning about their relationships.

- This project intends to model temporal event extraction and reasoning techniques that generate event timelines for crisis response.

- Research queries:

- What techniques can extract and normalize temporal expressions and events from disaster-related texts?

- How can event reasoning techniques efficiently capture multi-hop temporal event relationships?
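Multi-hop temporal reasoning can be illustrated with BEFORE relations that an extractor found in separate sentences. Chaining them infers orderings that no single sentence states, and a topological sort turns them into a timeline. The sketch uses made-up crisis events and requires Python 3.9+ for `graphlib`. Contradictory relations would form a cycle, which `graphlib` reports as a `CycleError`.

```python
"""Multi-hop reasoning over BEFORE relations to order crisis events (sketch)."""
from graphlib import TopologicalSorter  # Python 3.9+


def infer_before(pairs):
    """Return the transitive closure of (earlier, later) event pairs."""
    closure = set(pairs)
    changed = True
    while changed:
        changed = False
        for a, b in list(closure):
            for c, d in list(closure):
                if b == c and (a, d) not in closure:
                    closure.add((a, d))  # a before b and b before d => a before d
                    changed = True
    return closure


def timeline(pairs):
    """Order events consistently with all BEFORE constraints."""
    graph = {}
    for earlier, later in pairs:
        graph.setdefault(later, set()).add(earlier)  # node -> its predecessors
        graph.setdefault(earlier, set())
    return list(TopologicalSorter(graph).static_order())


# Hypothetical relations an extractor might find in separate sentences.
relations = [("earthquake", "tsunami warning"),
             ("tsunami warning", "evacuation"),
             ("evacuation", "shelter opening")]
print(("earthquake", "evacuation") in infer_before(relations))  # True (two hops)
print(timeline(relations))
# ['earthquake', 'tsunami warning', 'evacuation', 'shelter opening']
```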

- Abstractive Summarization of Research Papers for Literature Review Automation

- Problem Statement:

- Manually analyzing large numbers of research papers takes considerable time, which often results in biased or incomplete literature reviews.

- Develop an effective abstractive summarization model that produces concise summaries of research papers to support literature review generation.

- Research queries:

- How can transformer-based summarization models be optimized for summarizing long research papers?

- What evaluation metrics can assess the quality and factual accuracy of literature review summaries?
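ROUGE is the usual starting point for the second question. The simplified ROUGE-1 below counts clipped unigram overlap between a generated summary and a reference. It omits the stemming and tokenization rules of the official toolkit. High overlap does not by itself guarantee factual accuracy.

```python
"""Simplified ROUGE-1 F1 between a generated summary and a reference."""
from collections import Counter


def rouge1_f1(candidate, reference):
    """Unigram-overlap F1 with clipped counts (lowercased whitespace tokens)."""
    cand = Counter(candidate.lower().split())
    ref = Counter(reference.lower().split())
    overlap = sum((cand & ref).values())  # & keeps the minimum count per word
    if overlap == 0:
        return 0.0
    precision = overlap / sum(cand.values())
    recall = overlap / sum(ref.values())
    return 2 * precision * recall / (precision + recall)


reference = "the model summarizes long research papers"
candidate = "the model summarizes papers"
print(round(rouge1_f1(candidate, reference), 3))  # 0.8
```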

What are some research projects that can be done in NLP?

As the NLP (Natural Language Processing) domain constantly evolves with innovative methods and algorithms, it offers vast opportunities for scholars and researchers to conduct extensive studies. Here, we propose several compelling research topics in NLP:

- Few-Shot Learning for Cross-Lingual Named Entity Recognition (NER)

- Explanation: Design models that can accurately detect entities in resource-constrained languages with limited annotated data.

- Area of Focus:

- Apply multilingual models such as XLM-R or mBERT.

- Make use of domain adaptation and few-shot learning techniques.

- Problems:

- Handling language-specific terminology and entity ambiguity can be complicated.

- Aligning entity types across languages is difficult.

- Probable Datasets:

- CoNLL-2003 (English) and WikiAnn (multilingual NER).

- Neural Text Simplification for Legal and Medical Documents

- Explanation: Create frameworks that simplify complex texts to make them accessible to a wide range of readers.

- Area of Focus:

- Implement transformer models such as BART or T5.

- Incorporate readability metrics and domain-specific features.

- Problems:

- Balancing clarity with medical or legal precision is critical.

- The quality of text simplification must be assessed effectively.

- Probable Datasets:

- i2b2 Clinical Notes and CUAD (Contract Understanding Atticus Dataset).

- Robustness and Adversarial Attacks in Sentiment Analysis

- Explanation: Conduct an extensive study of adversarial attacks and defense techniques, particularly for sentiment analysis models.

- Area of Focus:

- Implement adversarial training methods to enhance model robustness.

- Design defense algorithms that detect and block adversarial attacks.

- Problems:

- Crafting realistic adversarial examples that fool sentiment analysis models is complex.

- Adversarial robustness must be balanced against classification performance.

- Probable Datasets:

- Yelp Reviews, Sentiment140 and IMDb Reviews.

- Fact-Checking in Open-Domain Question Answering (QA)

- Explanation: Develop a QA (Question Answering) system that verifies its generated answers using knowledge graphs and other sources.

- Area of Focus:

- Integrate knowledge graphs such as Wikidata into open-domain QA models.

- Apply RAG (Retrieval-Augmented Generation) to verify facts.

- Problems:

- Text must be aligned efficiently with structured knowledge bases.

- The main concern is detecting incorrect answers and assessing factual accuracy.

- Probable Datasets:

- ComplexWebQuestions, Natural Questions and WebQuestionsSP.

- Explainable Neural Machine Translation

- Explanation: Develop interpretable NMT (Neural Machine Translation) models that can explain their translation decisions.

- Area of Focus:

- Use attention mechanisms or SHAP/LIME to interpret model predictions.

- Apply contrastive learning algorithms to detect decision-relevant words.

- Problems:

- Interpretability must be balanced with translation performance.

- Evaluating the quality of explanations across multiple languages is crucial.

- Probable Datasets:

- IWSLT, Europarl and WMT Translation Tasks.

- Empathetic Response Generation in Conversational AI

- Explanation: Create dialogue systems that generate empathetic responses for mental health support.

- Area of Focus:

- Adapt pre-trained dialogue models such as GPT-4 or DialoGPT.

- Apply reinforcement learning methods to empathetic response generation.

- Problems:

- Empathetic responses must be balanced with sensitivity, especially in mental health support.

- Ensuring ethical use and privacy of conversational data can be complicated.

- Probable Datasets:

- DAIC-WOZ, EmpatheticDialogues and Mental Health Reddit Dataset.

- Open-Source Code Generation with GPT-Like Models

- Explanation: Design code generation frameworks using large-scale pre-trained models such as GPT-4.

- Area of Focus:

- Adapt pre-trained models to publicly accessible code repositories.

- Implement debugging tools for error correction, along with neural code summarization.

- Problems:

- Handling inconsistent naming conventions across code repositories can be difficult.

- The correctness and quality of generated code must be evaluated.

- Probable Datasets:

- CodeSearchNet, Programming Puzzles (Python) and GitHub Public Repos.

- Abstractive Summarization of Scientific Literature

- Explanation: Design models capable of producing concise summaries of scientific papers for literature analysis.

- Area of Focus:

- Implement transformer models such as PEGASUS, BART or T5.

- Incorporate citation networks and domain-specific keywords.

- Problems:

- The major challenge is maintaining the coherence and factual accuracy of summaries.

- Assessing summary quality and its impact on literature reviews can be difficult.

- Probable Datasets:

- PubMed Articles, ACL Anthology and ArXiv Papers.

- Neurosymbolic NLP Models for Logical Reasoning

- Explanation: Integrate neural networks with logical reasoning to support logical inference in NLP tasks.

- Area of Focus:

- Implement neurosymbolic models that incorporate logical reasoning.

- Apply neurosymbolic models to NLI (Natural Language Inference) tasks.

- Problems:

- Evaluating symbolic reasoning on complex tasks is crucial.

- Neural and logical components must be integrated seamlessly.

- Probable Datasets:

- Logical NLI (LoNLI), SNLI and MultiNLI.
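Here is the symbolic half made concrete. Once a neural component has mapped premise sentences to logical facts, forward chaining over Horn rules decides whether the hypothesis follows. The sketch shows only that symbolic step, with hand-written, hypothetical facts and rules. Contradiction detection would require negated facts and is omitted.

```python
"""Symbolic step of a neurosymbolic NLI pipeline: forward chaining (sketch)."""


def forward_chain(facts, rules):
    """Apply Horn rules (premises -> conclusion) until no new fact appears."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for premises, conclusion in rules:
            if conclusion not in known and all(p in known for p in premises):
                known.add(conclusion)
                changed = True
    return known


def nli_label(premise_facts, hypothesis, rules):
    """'entailment' if the hypothesis is derivable, else 'neutral'.

    A neural parser (not shown, hypothetical) would map sentences to facts.
    """
    derived = forward_chain(premise_facts, rules)
    return "entailment" if hypothesis in derived else "neutral"


# "Every dog is a mammal; every mammal is an animal; Rex is a dog."
rules = [(("dog(rex)",), "mammal(rex)"), (("mammal(rex)",), "animal(rex)")]
print(nli_label({"dog(rex)"}, "animal(rex)", rules))  # entailment
print(nli_label({"dog(rex)"}, "cat(rex)", rules))     # neutral
```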

- Temporal Event Extraction and Timeline Construction

- Explanation: Build temporal event extraction models that construct timelines for disaster monitoring.

- Area of Focus:

- Extract temporal expressions from disaster-related texts.

- Apply reasoning mechanisms to interpret multi-hop temporal event relationships.

- Problems:

- Overlapping events and temporal ambiguity must be handled effectively.

- The accuracy and completeness of the constructed timelines must be evaluated.

- Probable Datasets:

- CrisisLex, TempEval and TimeBank.

- Bias Detection and Mitigation in NLP Models

- Explanation: Ensure fairness across population groups by identifying and reducing biases in NLP models.

- Area of Focus:

- Create datasets for evaluating bias in pre-trained models.

- Use fairness-aware training methods to mitigate bias.

- Problems:

- Several kinds of bias must be defined and measured.

- Bias must be reduced without harming model performance.

- Probable Datasets:

- Gender Bias Evaluation Dataset, StereoSet and WinoBias.

- Sentiment Analysis for Financial Market Prediction

- Explanation: Design sentiment analysis models that use social media posts and financial reports to forecast market activity.

- Area of Focus:

- Use pre-trained language models to perform sentiment analysis.

- Improve prediction accuracy by incorporating market indicators.

- Problems:

- Sentiment scores must be aligned with financial indicators and market trends.

- The accuracy of forecasts and their implications for financial decisions must be assessed.

- Probable Datasets:

- Reuters, Twitter Financial Sentiment Dataset and Bloomberg.
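Aligning sentiment with market data mostly comes down to pairing each day's sentiment with a later return and checking whether the two move together. The sketch below uses integer day indices and made-up numbers purely for illustration. A correlation on toy data says nothing about real predictive power.

```python
"""Pair daily sentiment with later returns and measure correlation (sketch)."""
import math


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sd_x = math.sqrt(sum((x - mean_x) ** 2 for x in xs))
    sd_y = math.sqrt(sum((y - mean_y) ** 2 for y in ys))
    return cov / (sd_x * sd_y)


def lagged_pairs(sentiment, returns, lag=1):
    """Pair sentiment on day d with the return on day d + lag.

    Both inputs map integer day indices to values; days missing from
    either series are skipped.
    """
    days = sorted(d for d in sentiment if d + lag in returns)
    return [sentiment[d] for d in days], [returns[d + lag] for d in days]


# Made-up daily sentiment scores and next-day returns (%), for illustration only.
sentiment = {1: 0.2, 2: -0.1, 3: 0.4, 4: 0.0}
returns = {2: 0.5, 3: -0.3, 4: 0.9, 5: 0.1}
xs, ys = lagged_pairs(sentiment, returns)
print(round(pearson(xs, ys), 3))
```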

- Multimodal Co-Attention Networks for Enhanced Dialogue Understanding

- Explanation: Integrate text, audio and images to enhance context understanding in dialogue systems.

- Area of Focus:

- Create co-attention networks that align audio, text and images.

- Implement multimodal frameworks for empathetic dialogue generation.

- Problems:

- Handling audio data and missing modalities in dialogue applications is difficult.

- Proper modality alignment and fusion must be ensured.

- Probable Datasets:

- Friends TV Series Dataset, CMU-MOSI and MELD.

- Retrieval-Augmented Generation (RAG) for Domain-Specific Question Answering (QA)

- Explanation: Build a QA system that retrieves relevant documents and generates answers, particularly for domain-specific questions.

- Area of Focus:

- Apply pre-trained models such as T5 to implement RAG (Retrieval-Augmented Generation).

- Improve retrieval strategies to enhance domain-specific answer generation.

- Problems:

- Retrieved documents must be aligned effectively with the question context.

- Ensuring the factual accuracy of generated answers is crucial.

- Probable Datasets:

- BioASQ, CUAD (Contract Understanding Atticus Dataset) and SQuAD.
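The retrieval half of a RAG pipeline can be sketched with plain TF-IDF and cosine similarity. Domain passages are ranked against the question, and the best ones go into the generator's prompt. The documents, the prompt template and the `build_prompt` helper are hypothetical. The generation step itself (for example a T5 model) is left out.

```python
"""TF-IDF retrieval and prompt assembly for domain-specific RAG (sketch)."""
import math
import re
from collections import Counter


def tokenize(text):
    return re.findall(r"\w+", text.lower())


def tfidf(docs):
    """Return one TF-IDF dict per document plus the smoothed IDF table."""
    tokenized = [tokenize(d) for d in docs]
    df = Counter(t for toks in tokenized for t in set(toks))
    n = len(docs)
    idf = {t: math.log((1 + n) / (1 + c)) + 1 for t, c in df.items()}
    vectors = [{t: c * idf[t] for t, c in Counter(toks).items()} for toks in tokenized]
    return vectors, idf


def cosine(a, b):
    dot = sum(v * b.get(t, 0.0) for t, v in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(question, docs, k=1):
    """Return the k passages most similar to the question."""
    vectors, idf = tfidf(docs)
    # Question terms unseen in the collection get weight 0.
    q = {t: c * idf.get(t, 0.0) for t, c in Counter(tokenize(question)).items()}
    ranked = sorted(range(len(docs)), key=lambda i: cosine(q, vectors[i]), reverse=True)
    return [docs[i] for i in ranked[:k]]


def build_prompt(question, passages):
    """Hypothetical prompt template for the generator."""
    context = "\n".join(f"- {p}" for p in passages)
    return f"Answer using only this context:\n{context}\nQuestion: {question}\nAnswer:"


docs = [
    "The indemnification clause limits liability to direct damages.",
    "The termination clause allows either party to exit with 30 days notice.",
    "Insulin regulates blood glucose levels.",
]
question = "Which clause allows a party to exit the contract?"
print(build_prompt(question, retrieve(question, docs)))
```

In practice, dense retrievers often replace TF-IDF for domain-specific QA. The retrieve-then-prompt structure stays the same.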

- Neural Text Generation with Style Transfer for Creative Writing

- Explanation: Create text generation models for storytelling that can adapt to various writing styles.

- Area of Focus:

- Apply T5, GPT-4 or other transformer-based models to style transfer.

- Use contrastive learning to distinguish between writing styles.

- Problems:

- Creativity must be balanced with grammatical coherence in storytelling.

- Assessing the originality and stylistic quality of generated stories is important.

- Probable Datasets:

- ROCStories, WritingPrompts and BookCorpus.

Natural Language Processing PhD

Discover the latest and most effective career-enhancing concepts right here! Stay connected with us to unlock a world of original ideas in Natural Language Processing that will propel your career to new heights. Whether you’re pursuing a PhD or simply looking to expand your knowledge, we’ve got you covered with a wide range of topics for your research project. Plus, with our strong affiliations with over 200 prestigious SCI and SCOPUS indexed journals, publishing your paper will be a breeze.

- Mining peripheral arterial disease cases from narrative clinical notes using natural language processing

- Peeking behind the page: using natural language processing to identify and explore the characters used to classify sea anemones

- Improving intelligence through use of Natural Language Processing. A comparison between NLP interfaces and traditional visual GIS interfaces.

- ISO reference terminology models for nursing: Applicability for natural language processing of nursing narratives

- Automated chart review for asthma cohort identification using natural language processing: an exploratory study

- A vocabulary development and visualization tool based on natural language processing and the mining of textual patient reports

- Performance of a Natural Language Processing Method to Extract Stone Composition From the Electronic Health Record

- Parsimonious memory unit for recurrent neural networks with application to natural language processing

- Natural Language Processing (NLP) – A Solution for Knowledge Extraction from Patent Unstructured Data

- Semantic similarity of short texts in languages with a deficient natural language processing support

- A psycholinguistically and neurolinguistically plausible system-level model of natural-language syntax processing

- Applying a natural language processing tool to electronic health records to assess performance on colonoscopy quality measures

- A Sinhala Natural Language Interface for Querying Databases Using Natural Language Processing

- Building a Natural Language Processing Model to Extract Order Information from Customer Orders for Interpretative Order Management

- Intelligent Approaches for Natural Language Processing for Indic Languages

- Research on Web monitoring system based on natural language processing

- Integration of speech recognition and natural language processing in the MIT VOYAGER system

- Class Diagram Extraction from Textual Requirements Using Natural Language Processing (NLP) Techniques

- Natural Language Grammar Induction of Indonesian Language Corpora Using Genetic Algorithm

- A rule-based grapheme-phone converter and stress determination for Brazilian Portuguese natural language processing