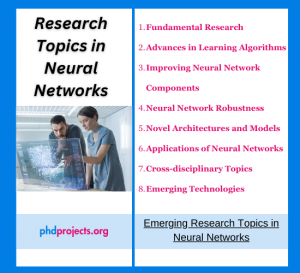

Research Topics in Neural Networks

Neural network research is a broad and rapidly evolving field within artificial intelligence and machine learning. It spans theoretical foundations, methodological improvements, novel architectures, and a wide range of applications. The team at phdprojects.org works closely with you to identify original and novel topics in your area, and we offer dedicated support to help you develop a meaningful project. Below, we discuss several current and emerging research topics in neural networks:

Fundamental Research:

- Neural Network Theory: Our research examines the theoretical foundations of neural networks, such as their capacity, their generalization capabilities, and the reasons they perform well across many tasks.

- Optimization Methods: We develop novel optimization techniques to train neural networks efficiently and reliably.

- Neural Architecture Search (NAS): We use machine learning to search for the best network architectures, which automates much of the neural network design process.

- Quantum Neural Networks: We analyze the intersection of neural networks and quantum computing and examine how quantum techniques could improve the efficiency of neural networks.

Advances in Learning Techniques:

- Meta-Learning: In meta-learning, a model learns how to learn. It improves on each new task by reusing the skills it gained on earlier ones.

- Federated Learning: We explore training neural networks across many distributed devices while preserving data privacy and security.

- Reinforcement Learning: We improve methods that enable models to make sequential decisions by interacting with their environment in order to achieve a goal.

- Few-shot or Semi-supervised Learning: These techniques allow our neural network models to learn from a small labeled dataset, which in the semi-supervised case is supplemented by a large unlabeled dataset.
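To make the federated learning item above more concrete, here is a minimal sketch of one common aggregation rule, federated averaging. It is an illustration only: the text does not say which aggregation method is used, and the function name `federated_average`, the flat parameter lists, and the example numbers are all assumptions. Each device trains locally and sends only its parameters; the server combines them, weighted by how many samples each device holds.

```python
from typing import List, Sequence


def federated_average(client_weights: Sequence[Sequence[float]],
                      client_sizes: Sequence[int]) -> List[float]:
    """Combine per-client parameter vectors into one global vector.

    Each client contributes in proportion to its local dataset size.
    Raw training data never leaves the clients; only parameters are shared.
    """
    if not client_weights or len(client_weights) != len(client_sizes):
        raise ValueError("need one dataset size per client")
    total = sum(client_sizes)
    if total <= 0:
        raise ValueError("total number of samples must be positive")

    dim = len(client_weights[0])
    averaged = [0.0] * dim
    for weights, size in zip(client_weights, client_sizes):
        if len(weights) != dim:
            raise ValueError("all clients must share the same parameter shape")
        for i, w in enumerate(weights):
            averaged[i] += w * size / total
    return averaged


# Hypothetical example: two devices, the second holds three times more data.
global_weights = federated_average([[1.0, 2.0], [3.0, 4.0]], [1, 3])
print(global_weights)
```

In a full system this aggregation step would alternate with rounds of local training on each device.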

Enhancing Neural Network Components:

- Activation Functions: We investigate different activation functions to improve the performance and training dynamics of neural networks.

- Dynamic & Adaptive Networks: This area covers neural networks that change their architecture and size during training according to the difficulty of the task.

- Regularization Methods: We develop novel regularization techniques to prevent overfitting and improve the generalization of neural networks.
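As a small illustration of two of the components listed above, the sketch below defines two widely used activation functions (ReLU and sigmoid) and a standard L2 weight penalty added to a training loss. These are textbook examples chosen for illustration, not the novel techniques the text refers to; the names `l2_penalty`, `regularized_loss`, and the coefficient `lam` are assumptions.

```python
import math
from typing import Sequence


def relu(x: float) -> float:
    """Rectified linear unit: passes positive values, zeroes out negatives."""
    return max(0.0, x)


def sigmoid(x: float) -> float:
    """Logistic sigmoid: squashes any real number into the interval (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def l2_penalty(weights: Sequence[float], lam: float) -> float:
    """L2 regularization term: lam times the sum of squared weights."""
    return lam * sum(w * w for w in weights)


def regularized_loss(data_loss: float, weights: Sequence[float], lam: float) -> float:
    """Training objective = loss on the data + penalty on large weights."""
    return data_loss + l2_penalty(weights, lam)


# Hypothetical values: a data loss of 1.0 and two weights.
print(relu(-2.0), relu(3.0), sigmoid(0.0))
print(regularized_loss(1.0, [1.0, 2.0], lam=0.1))
```

Penalizing large weights discourages the model from fitting noise in the training data, which is one simple way to reduce overfitting.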

Neural Network Robustness and Trustworthiness:

- Explainable AI (XAI): We improve the interpretability of neural network decisions to make our models more transparent and trustworthy.

- Adversarial Machine Learning: Our research explores the security of neural networks, specifically their robustness against adversarial attacks, and develops defenses.

- Fault Tolerance in Neural Networks: We ensure that our neural networks remain robust even when some of their components fail or the data is corrupted.

New Architectures & Frameworks:

- Capsule Networks: We study capsule networks, which aim to address limitations of CNNs, including their inefficiency in handling spatial hierarchies.

- Spiking Neural Networks (SNN): We build neural models that closely mimic the way biological neurons process information, which may lead to more robust AI systems.

- Hybrid Models: Our projects combine neural networks with statistical models or other machine learning methods to leverage the strengths of both.

Neural Network Applications:

- Clinical Diagnosis: We improve the use of neural networks in clinical imaging and diagnosis, including radiology, pathology, and genomics.

- Climate Modeling: Neural networks help us understand complex climate systems and improve the accuracy of climate forecasts.

- Autonomous Systems: Our projects develop neural networks for use in autonomous drones, robots, and self-driving cars.

- Neural Networks in Natural Language Processing (NLP): We apply the latest language models to tasks such as summarization, translation, and question answering.

- Financial Modeling: Neural networks help us forecast market trends, assess risk, and automate trading.

Cross-disciplinary Concepts:

- Bio-inspired Neural Networks: We draw inspiration from neuroscience to develop more robust and effective neural network methods.

- Neural Networks for Social Good: Our research applies neural networks to social challenges such as disaster response, poverty alleviation, and tracking the spread of disease.

Evolving Approaches:

- AI for Creativity: We use neural networks for creative tasks such as generating art, music, designs, and writing.

- Edge AI: We optimize neural networks so that they run effectively on edge devices, such as IoT devices or smartphones, with limited computational power.

When selecting a research topic, it is important to consider the available resources, your own expertise, and the potential impact of the project. Collaboration with industry, interdisciplinary teams, and institutions can open up new research directions and reveal practical applications for the project.

What specific neural network architectures are being explored in the research thesis?

A neural network architecture uses a sequence of organized layers to transform input data into meaningful representations. The input layer receives the raw data, which then passes through mathematical computations in one or more hidden layers.
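The layered computation described above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not a specific architecture from the text: the helper names `dense` and `forward`, the ReLU activation in the hidden layers, and the example weights are all hypothetical.

```python
from typing import List, Sequence, Tuple

# A layer is a (weights, biases) pair; weights holds one row per output unit.
Layer = Tuple[Sequence[Sequence[float]], Sequence[float]]


def dense(inputs: Sequence[float],
          weights: Sequence[Sequence[float]],
          biases: Sequence[float]) -> List[float]:
    """One fully connected layer: a weighted sum of the inputs plus a bias per unit."""
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]


def forward(x: Sequence[float], layers: Sequence[Layer]) -> List[float]:
    """Pass raw input through every layer in order.

    Hidden layers apply ReLU (an assumed choice); the final layer is left linear.
    """
    activation = list(x)
    for index, (weights, biases) in enumerate(layers):
        activation = dense(activation, weights, biases)
        if index < len(layers) - 1:
            activation = [max(0.0, value) for value in activation]
    return activation


# Hypothetical network: 2 inputs -> 2 hidden units -> 1 output.
layers = [
    ([[1.0, -1.0], [0.5, 0.5]], [0.0, 0.0]),  # hidden layer
    ([[1.0, 1.0]], [0.1]),                    # output layer
]
print(forward([2.0, 1.0], layers))
```

In practice the weights are learned from data rather than written by hand, and a numerical library would replace the explicit loops.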

Convolutional Neural Networks (CNNs) excel at image recognition tasks, while Recurrent Neural Networks (RNNs) are well suited to sequential data such as text and time series.

- Global Asymptotical Stability of Recurrent Neural Networks With Multiple Discrete Delays and Distributed Delays

- An Improved Algebraic Criterion for Global Exponential Stability of Recurrent Neural Networks With Time-Varying Delays

- Finding Features for Real-Time Premature Ventricular Contraction Detection Using a Fuzzy Neural Network System

- Improved Delay-Dependent Stability Condition of Discrete Recurrent Neural Networks With Time-Varying Delays

- Experiments in the application of neural networks to rotating machine fault diagnosis

- Flash-based programmable nonlinear capacitor for switched-capacitor implementations of neural networks

- Polynomial functions can be realized by finite size multilayer feedforward neural networks

- Convergence of Nonautonomous Cohen–Grossberg-Type Neural Networks With Variable Delays

- Analysis and Optimization of Network Properties for Bionic Topology Hopfield Neural Network Using Gaussian-Distributed Small-World Rewiring Method

- Comparing Support Vector Machines and Feedforward Neural Networks With Similar Hidden-Layer Weights

- An artificial neural network study of the relationship between arousal, task difficulty and learning

- Flow-Based Encrypted Network Traffic Classification With Graph Neural Networks

- Deriving sufficient conditions for global asymptotic stability of delayed neural networks via nonsmooth analysis-II

- Bifurcating pulsed neural networks, chaotic neural networks and parametric recursions: conciliating different frameworks in neuro-like computing

- Prediction of internal surface roughness in drilling using three feedforward neural networks – a comparison

- Comparison of two neural networks approaches to Boolean matrix factorization

- A new class of convolutional neural networks (SICoNNets) and their application of face detection

- The Guelph Darwin Project: the evolution of neural networks by genetic algorithms

- Training neural networks with threshold activation functions and constrained integer weights

- A commodity trading model based on a neural network-expert system hybrid